Have you ever wondered how good your data model really is? Or whether it is possible to evaluate a data model objectively, for example with the Data Model Scorecard?

Objectively evaluating data models and setting quality targets for them is not easy. Very often, emotions play a major role when a data model is reviewed. Who wants to have their data model, their baby, criticized? Interestingly, this is particularly difficult with data models, reports Diego Pasión from his day-to-day coaching work at FastChangeCo [1].

(The original 2017 blog post "Data Model Scorecard" can still be read here.)

Evaluating a data model

At FastChangeCo, Diego Pasión likes to ask data modeling teams the following question at the beginning of a review with the Data Model Scorecard:

"Have you ever wondered how well your data model would perform in a qualitative review? Can you imagine your data model achieving a success rate of 90%, 95% or even 100% across ten categories with objective criteria?"

“¿No? ¿O sí?” (No? Or yes?)

Regardless of whether the teams answer "no" or "yes", Diego recommends that they do something to check the quality of their data models.

After all, testing and quality frameworks and standards such as ISTQB, IEEE, RUP, ITIL and COBIT have been used in software development for years. There are also established test methods for loading processes, data quality and security in data warehouse projects (referred to as data solutions in the following). For data models? Rarely or never!

But why is that the case?

Diego usually answers this question as follows: "Unfortunately, the task, or rather the role, of the data modeler does not yet seem to have achieved the status it deserves. When I look around companies, I keep hearing: anyone can do data modeling, it's easy, just draw a few tables in PowerPoint or Visio or create them in the database. Or even worse: data modeling is abstract, time-consuming or even unnecessary."

But that's not true!

Because data modeling is a method for representing the (enterprise) information and data landscape and, at the same time, a tool for communicating with business experts about business terms, their definitions and business rules (the reasons for the relationships between the business terms)!

It is an art in itself to combine all parts of a data solution into a coherent whole, such as:

- Facts, requirements and value chain (as a relational business data model, e.g. as an entity relationship or FCO-IM data model)

- Analytical requirements (as a dimensional business data model, e.g. as a Star Schema data model)

- Operational systems (as a relational business data model for a better understanding of the operational system)

- Reports (as a dimensional domain-oriented data model, e.g. as a domain-oriented Star Schema data model)

Business data models are at the heart of every data solution. Together with the data architecture, they determine the success or failure of a long-term project such as a data solution.

The Data Doctrine states: "Stable data structures are more valuable and more important than stable code." This means that even the best automation solution, or the best data objects in a database, do not necessarily produce a good, sustainable and valuable data solution.

After all, what good is it if all the data is processed and stored automatically, but it does not correspond to any business structure and therefore creates no added value for the users of the data? Exactly: little to nothing.

For this reason, a qualitative review of all types of data models is of fundamental importance for the company. FastChangeCo has recognized this: good-quality business data models that are checked with an objective scorecard add value and are of great importance to the company.

This realization has prompted FastChangeCo to view data models as a corporate asset. Sylvia Seven, CFO of FastChangeCo, puts it in a nutshell: "Only with high-quality data models can FastChangeCo save millions of euros in the development of future data solutions through technology-independent reusability" [3].

The Data Model Scorecard

But how do you determine the quality of a data model? And how do you test the data model?

One possible tool for improving the quality of data models is the Data Model Scorecard® by Steve Hoberman [2].

Diego uses it both in his own projects and as a tool for checking his customers' data models objectively and independently. What he likes about the scorecard is that it divides the validation of a data model into ten categories, each of which helps when creating or reviewing a data model on the way to a better one.

The ten categories that are typically reviewed with the scorecard are:

- Correctness - Does the model meet the requirements?

- Completeness - Is the model complete, but without "gold plating"?

- Schema - Does the model correspond to its schema (relational, dimensional, ensemble or NoSQL - conceptual, logical or physical)?

- Structure - Is the data model consistent, does it have integrity, and does it follow the basic rules of data modeling?

- Abstraction - Does the data model have the right balance between generalization and specialization?

- Standards - Does the data model follow the (available) rule and naming standards?

- Readability - Is the data model presented in a readable layout?

- Definitions - Are there correct, complete and unambiguous definitions for entities and attributes?

- Consistency - Does the data model use the same structure and terminology as the rest of the organization (e.g., the enterprise data model)?

- Data - Has a data profile been created and do the attributes and rules of the data model correspond to reality?
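
To make the idea of a percentage result concrete, here is a minimal sketch of how a reviewer might total a scorecard, assuming each category has a maximum number of points and the overall result is the share of points achieved. The point weights and review results below are illustrative assumptions, not the allocation from Hoberman's book, and `CategoryScore`, `total_percentage` and `weakest` are hypothetical names.

```python
from dataclasses import dataclass


@dataclass
class CategoryScore:
    name: str
    max_points: int  # illustrative weight, NOT taken from Hoberman's book
    points: float    # points awarded by the reviewer

    def __post_init__(self) -> None:
        # Reject scores outside the category's range.
        if not 0 <= self.points <= self.max_points:
            raise ValueError(f"{self.name}: points must be between 0 and {self.max_points}")


def total_percentage(scores: list[CategoryScore]) -> float:
    """Overall result as a percentage of the maximum achievable points."""
    max_total = sum(s.max_points for s in scores)
    awarded = sum(s.points for s in scores)
    return 100.0 * awarded / max_total


def weakest(scores: list[CategoryScore], n: int = 3) -> list[str]:
    """Names of the n categories with the lowest share of their maximum points."""
    ranked = sorted(scores, key=lambda s: s.points / s.max_points)  # stable sort keeps ties in list order
    return [s.name for s in ranked[:n]]


# Hypothetical weights and review results, for illustration only.
review = [
    CategoryScore("Correctness", 15, 14),
    CategoryScore("Completeness", 15, 13),
    CategoryScore("Schema", 10, 10),
    CategoryScore("Structure", 15, 14),
    CategoryScore("Abstraction", 10, 8),
    CategoryScore("Standards", 5, 4),
    CategoryScore("Readability", 5, 5),
    CategoryScore("Definitions", 10, 8),
    CategoryScore("Consistency", 5, 4),
    CategoryScore("Data", 10, 9),
]

print(f"Overall: {total_percentage(review):.1f}%")  # prints "Overall: 89.0%"
print("Improve first:", ", ".join(weakest(review, 2)))
```

Ranking categories by their share of the maximum rather than by raw points keeps a weak result in a lightly weighted category, such as Standards, from being hidden behind the heavier ones.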

Are there challenges?

"Of course, and they should not be underestimated," Diego reports from his day-to-day project work. "Above all, this means applying the scorecard consistently and, first and foremost, integrating it into everyday project work. During the review itself, it is always a challenge to get the input needed to verify the data models at all. But after a few reviews, it gets better," says Diego.

A Practical Scorecard Example

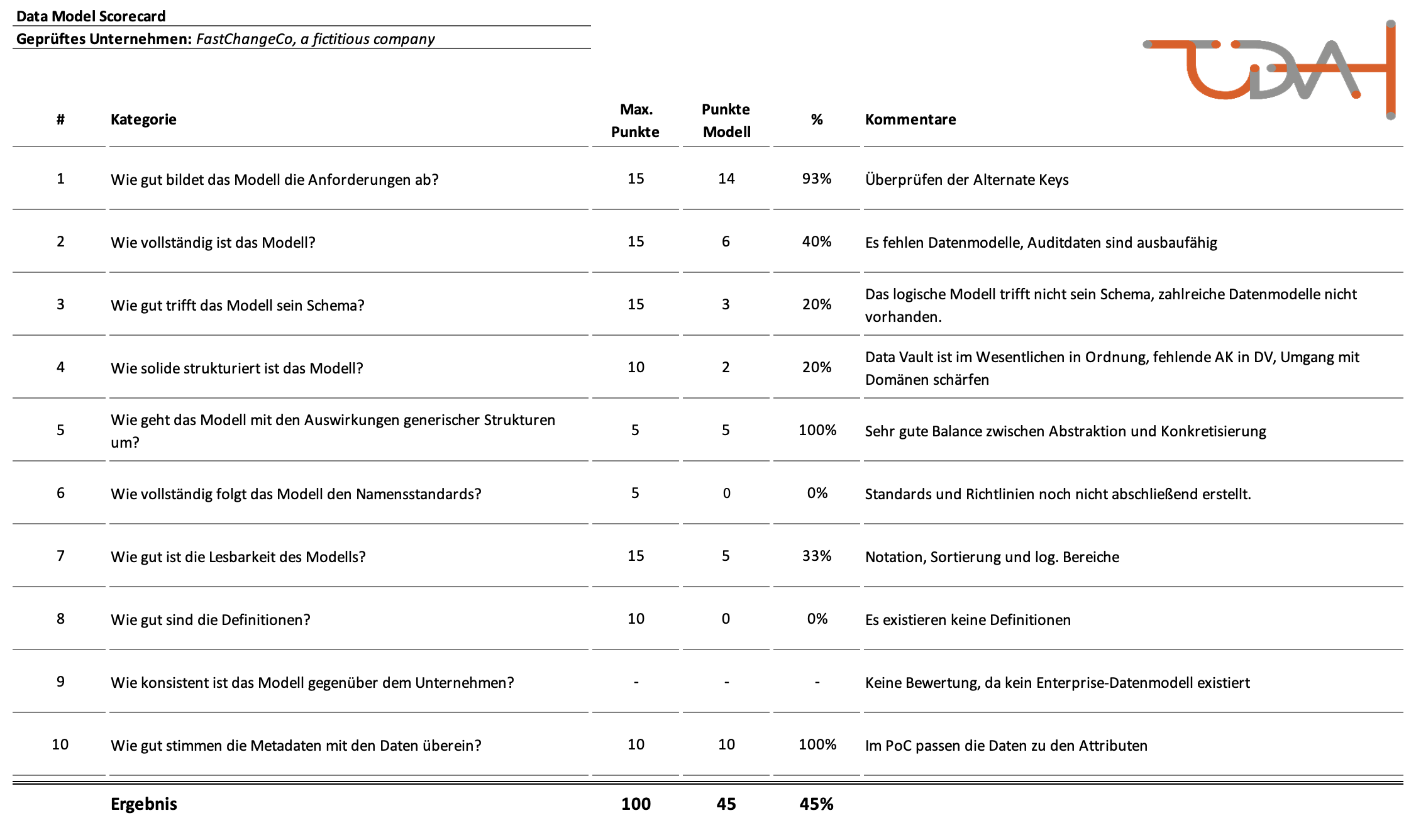

Diego shows us an example from his day-to-day project work, which illustrates the final scorecard after a review:

"Here is a scorecard I created for a client to review their data model. It was a good result from the first review. But to be honest: Achieving 90% or more takes a lot of work!"

The Data Model Scorecard is one way of assessing the quality of a data model. Existing quality controls in the company can also serve this purpose: they can be integrated into the scorecard or serve as the basis for your own, customized scorecard.

It is important that the quality of the data model is "tested" in the first place and that the necessary criteria are defined.
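
Defining such criteria can start small, because some of them lend themselves to automated checks. The following is a minimal sketch, assuming the model's metadata has been exported into simple Python objects and applying a hypothetical naming standard (entity names in UpperCamelCase, attribute names in snake_case). `Entity`, `Attribute` and `check_model` are illustrative names, not part of the scorecard or of any modeling tool, and the sketch covers only the Definitions and Standards categories; categories such as Correctness still need a human reviewer.

```python
import re
from dataclasses import dataclass, field


@dataclass
class Attribute:
    name: str
    definition: str = ""


@dataclass
class Entity:
    name: str
    definition: str = ""
    attributes: list[Attribute] = field(default_factory=list)


# Hypothetical naming standard, used only for this sketch.
ENTITY_NAME = re.compile(r"^[A-Z][A-Za-z0-9]*$")   # e.g. CustomerOrder
ATTRIBUTE_NAME = re.compile(r"^[a-z][a-z0-9_]*$")  # e.g. order_date


def check_model(entities: list[Entity]) -> list[str]:
    """Return findings for the Definitions and Standards categories."""
    findings = []
    for e in entities:
        if not e.definition.strip():
            findings.append(f"Definitions: entity {e.name} has no definition")
        if not ENTITY_NAME.match(e.name):
            findings.append(f"Standards: entity name {e.name} violates naming standard")
        for a in e.attributes:
            if not a.definition.strip():
                findings.append(f"Definitions: attribute {e.name}.{a.name} has no definition")
            if not ATTRIBUTE_NAME.match(a.name):
                findings.append(f"Standards: attribute name {e.name}.{a.name} violates naming standard")
    return findings


# Hypothetical model excerpt with deliberate gaps.
model = [
    Entity("Customer", "A person or organization that buys from FastChangeCo.",
           [Attribute("customer_id", "Unique identifier of a customer."),
            Attribute("CustName")]),
    Entity("order", "", [Attribute("order_date", "Date the order was placed.")]),
]

for finding in check_model(model):
    print(finding)
```

Findings like these can feed into the reviewer's points for the corresponding categories instead of replacing the review itself.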

That's it for today with a new story from the world of FastChangeCo. If you have any questions about the Data Model Scorecard®, please leave a comment below or send me a message.

See you soon,

Dirk

[1] Diego Pasión is a fictional character in the fictional world of FastChangeCo™.

[2] S. Hoberman, Data Model Scorecard, Technics Publications, LLC, 2015.

[3] Sylvia Seven, CFO, is a fictional character in the fictional world of FastChangeCo™.