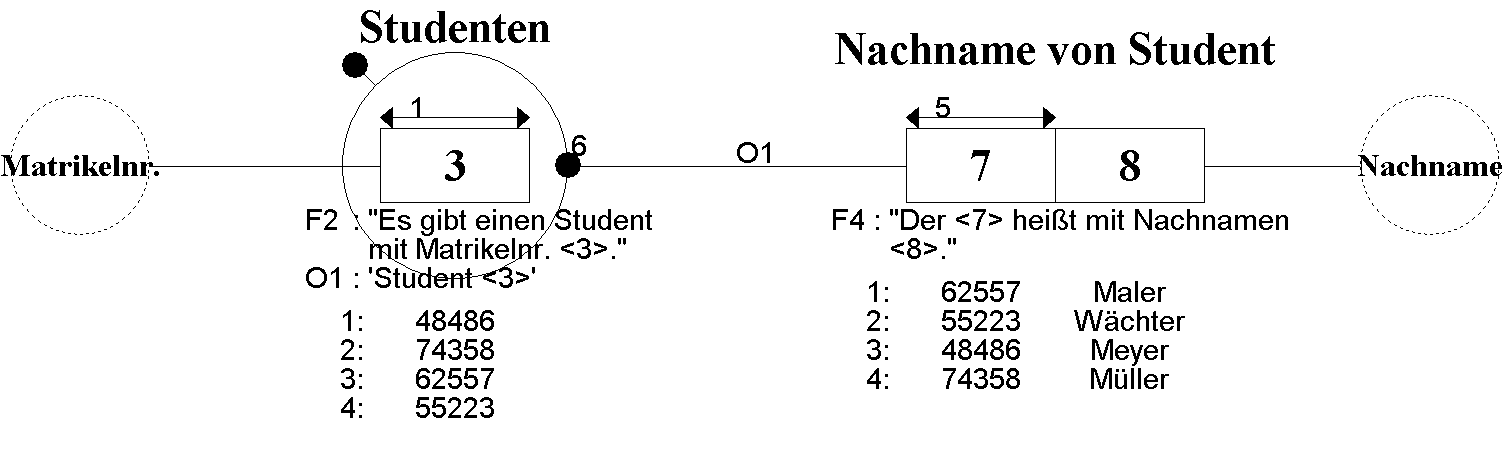

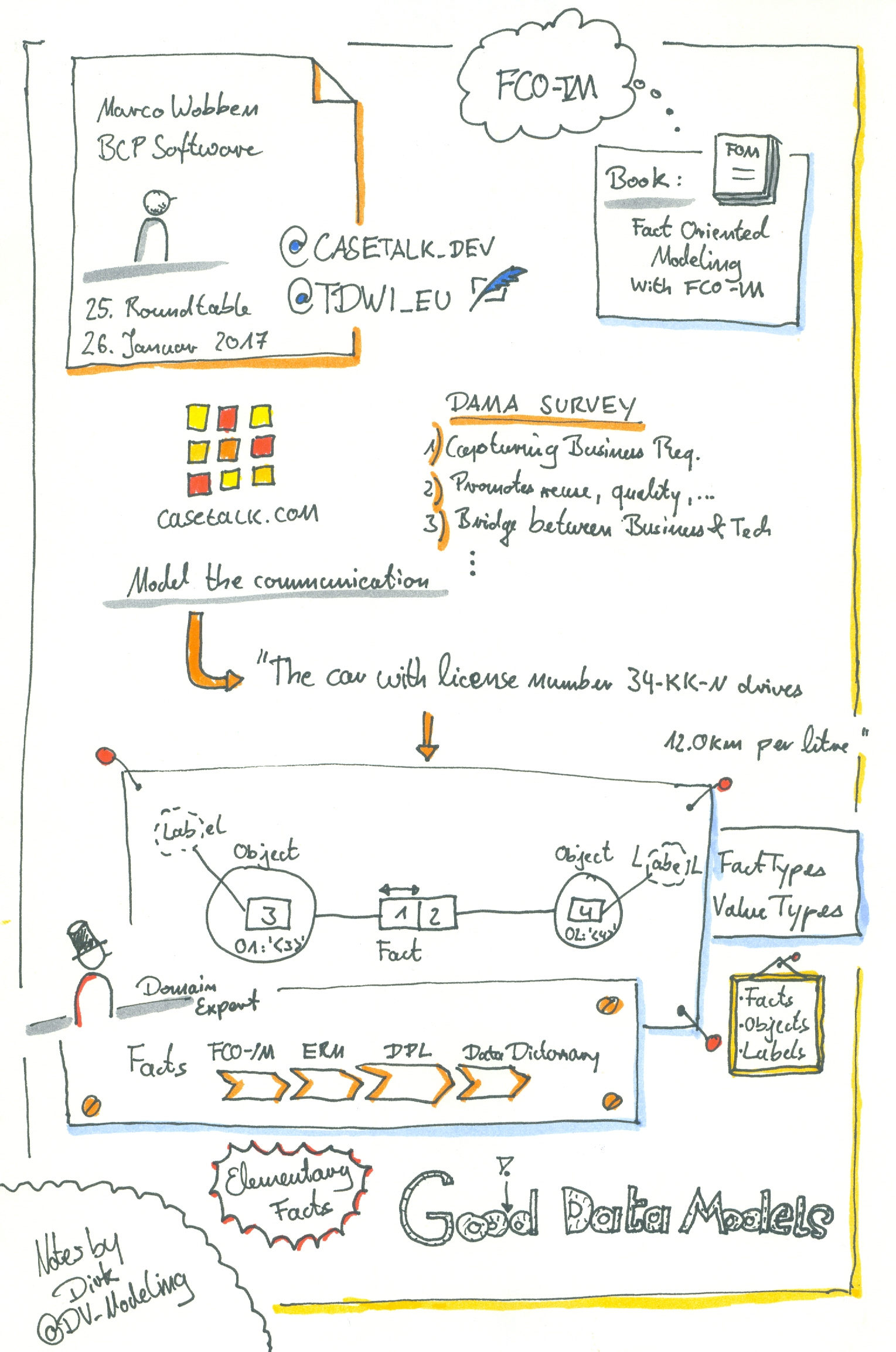

Months ago, I talked with Stephan Volkmann, the student I mentor, about possible topics for a seminar paper. One suggestion was to write about Information Modeling, namely FCO-IM, ORM2 and NIAM, siblings in the Fact-Oriented Modeling (FOM) family. In my opinion, FOM is the most powerful technique for building conceptual information models, as I wrote in a previous blog post, Sketch Notes Reflections at TDWI Roundtable with FCO-IM.

FastChangeCo is a fictitious company I created in 2015 to illustrate the problems, challenges and opportunities our customers faced in their projects. I have used FastChangeCo successfully in my talks, workshops, trainings, coaching and projects.

FastChangeCo was founded in the early 20th century; today, we would call it a startup. The founder, Mr. Fast, had great ideas for building assembly lines, which emerged in the early 20th century and were used by Ford and others. Over the following decades his company grew, and by the 1990s it had become a big player.

Nowadays, FastChangeCo has thousands of employees, thousands of products in different industries and billions in revenue.

Objective review and data quality goals of data models

Have you ever asked yourself what score your data model would achieve? Could you imagine 90%, 95% or even 100% across 10 categories of objective criteria?

No?

Yes?

Whether you answered “no” or “yes”, I recommend testing the quality of your data model(s). For years there have been methods to test and ensure quality in software development, such as ISTQB, IEEE, RUP, ITIL, COBIT and many more. In data warehouse projects, I have seen test methods covering everything: loading processes (ETL), data quality, organizational processes, security, …

But data models? Never! But why?

Message: Thank you for signing The Data Doctrine!

What a fantastic moment. I’ve just signed The Data Doctrine. What is The Data Doctrine? Following a philosophy similar to that of the Agile Manifesto, it offers us data geeks a data-centric culture:

Value Data Programmes¹ Preceding Software Projects

Value Stable Data Structures Preceding Stable Code

Value Shared Data Preceding Completed Software

Value Reusable Data Preceding Reusable Code

While reading The Data Doctrine, I looked back on all the opportunities lost in data warehouse projects because companies, project teams, or even individuals ignored the value of data and suffered the consequences. I saw data warehouse projects struggling with a lack of stable data structures, both in the source systems and in the data warehouse itself. I saw fancy new systems where no one cares which data is generated, what it means, or how it comes about. Even worse for a data warehouse project is the practice of keeping data locked away, with access limited to a few departmental castles and principalities.

None of this is the way to get value out of corporate data or to leverage it for value creation.

As an advocate of flexible, lean and easily extensible data warehouse principles and practices, I support The Data Doctrine’s aim of deepening the understanding of why data architecture and data-centric principles are needed.

So long,

Dirk

1 To emphasize the point, we (the authors of The Data Doctrine) use the British spelling “programme” to reinforce the difference between a data programme, which is a set of structured activities, and a software program, which is a set of instructions that tell a computer what to do (Wikipedia, 2016).

Our 25th anniversary roundtable in Frankfurt with FCO-IM was a great success. Almost 90 registrations and more than 60 attendees were an unexpected outcome for a topic that is almost unknown in Germany. If you want to know what happened at the roundtable, read about it in my previous blog post, FCO-IM at TDWI Roundtable in Frankfurt.

A conference for the data-driven generation!

It’s late October 2016, and an incredible crowd of young, data-driven peeps is on its way to Berlin, looking forward to meeting many other peeps with the same attitude at the Data Natives conference: doing business with data, or seeing huge value in using data for the future. Besides the crowd, I was impressed not only by the location but also by the number of startups at the conference.

The two-day schedule was packed with talks, and it wasn’t easy to choose between all these interesting topics. So I decided not to put too much pressure on myself. Instead, I cruised through the program and stumbled upon some highlights.

In July 2016, Mathias Brink and I gave a webinar on how to implement Data Vault on an EXASOL database. Read more about it in my previous blog post or watch the recording on YouTube.

Afterwards, I received a lot of questions about our webinar. I’ll now answer all the questions I have received so far. If you have further questions, feel free to ask via my contact page, via Twitter, or by writing a comment right here.
