Data Warehouse

-

Data Vault - Is Data Modeling Still Necessary?

As I already described in my blog post Modellierung oder Business Rule (Modeling or Business Rule), data modeling requires giving some thought to business objects, the value chain, business details and the modeling methodology.

Or does it? Can I simply get started with Data Vault? After all, Data Vault looks quite simple at first glance: three objects (HUBs, LINKs and SAT(ellites)), a simple process model and just a few rules. Do I still need data modeling for Data Vault?
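To make the three objects concrete, here is a minimal Python sketch of how HUBs, LINKs and SATellites are often structured and loaded. The columns (business key, load date, record source) follow common Data Vault conventions and are assumptions, as are all class and method names. Real implementations add hash or surrogate keys and run inside the database. The sketch only shows the insert-only loading rules. A hub stores each business key once, a link stores each relationship once, and a satellite stores a new version only when the descriptive attributes change.

```python
from __future__ import annotations

from dataclasses import dataclass, field
from datetime import datetime, timezone

# Hypothetical, simplified row types; the column choices are assumptions
# based on common Data Vault conventions.

@dataclass(frozen=True)
class HubRow:
    business_key: str     # e.g. a customer number
    load_date: datetime   # when the key was first loaded
    record_source: str    # source system that delivered the key

@dataclass(frozen=True)
class LinkRow:
    hub_keys: tuple[str, ...]  # business keys of the related hubs
    load_date: datetime
    record_source: str

@dataclass(frozen=True)
class SatRow:
    parent_key: str                          # business key of the described hub
    load_date: datetime
    record_source: str
    attributes: tuple[tuple[str, str], ...]  # sorted (name, value) pairs

@dataclass
class Hub:
    rows: dict[str, HubRow] = field(default_factory=dict)

    def load(self, business_key: str, record_source: str) -> bool:
        """Insert-only: store a business key once; return True if it was new."""
        if business_key in self.rows:
            return False
        self.rows[business_key] = HubRow(
            business_key, datetime.now(timezone.utc), record_source)
        return True

@dataclass
class Link:
    rows: dict[tuple[str, ...], LinkRow] = field(default_factory=dict)

    def load(self, hub_keys: tuple[str, ...], record_source: str) -> bool:
        """Insert-only: store each combination of hub keys once."""
        if hub_keys in self.rows:
            return False
        self.rows[hub_keys] = LinkRow(
            hub_keys, datetime.now(timezone.utc), record_source)
        return True

@dataclass
class Satellite:
    rows: list[SatRow] = field(default_factory=list)

    def load(self, parent_key: str, record_source: str,
             attributes: dict[str, str]) -> bool:
        """Insert-only: add a new version only if the attributes changed."""
        new_attrs = tuple(sorted(attributes.items()))
        versions = [r for r in self.rows if r.parent_key == parent_key]
        if versions and versions[-1].attributes == new_attrs:
            return False  # unchanged: no new version is stored
        self.rows.append(SatRow(
            parent_key, datetime.now(timezone.utc), record_source, new_attrs))
        return True

if __name__ == "__main__":
    customers = Hub()
    print(customers.load("C-1001", "CRM"))  # True: new business key
    print(customers.load("C-1001", "ERP"))  # False: key already known
```

Nothing is ever updated in place in this sketch. That is one reason the rules look simple. Whether the model behind them is a good one is a separate question, and that is the question of data modeling.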

-

Data Vault is the modern answer

New ways in data modeling

Business requirements, or business needs, change at very short intervals in today's companies. What was considered settled yesterday may be over tomorrow. Business departments demand suitable data for their decisions at ever shorter intervals. Fixed and rigid rules are deliberately broken in order to discover something new.

-

Data Warehouse Automation: Expectations, Vendor Promises and Reality

How can recurring tasks be simplified? The question of automating processes in a data warehouse (DWH) keeps project teams busy.

-

Data Warehouse automation: Key insights, benefits & real-world challenges

How can recurring tasks be simplified? The question of automating processes in a data warehouse (DWH) keeps project teams busy.

-

DBMS resource file

PowerDesigner lets you edit the properties of a database in so-called DBMS resource files. The DBMS resource files contain all the information PowerDesigner needs to generate DDL, DML and other SQL artifacts. For many databases, pre-built DBMS resource files for the different database versions are included.

-

DBMS resource file

PowerDesigner lets you edit the properties of a database in so-called DBMS resource files. The DBMS resource files contain all the information PowerDesigner needs to generate DDL, DML and other SQL artifacts. For many databases, pre-built DBMS resource files for the different database versions are included.

-

The importance of bitemporal data

I was very pleased with Dirk Lerner's training "Temporal Data in a Fast-Changing World!". It answered all my questions about bitemporality, which other Data Vault trainings had not addressed as clearly.

-

DMZ Europe 2016

This year’s European Data Modeling Zone (DMZ) will take place in the wonderful German capital, Berlin, and I’m very happy to be a speaker at this great event again! This year I’ll speak about how to start with a conceptual model, move on to a logical model, and finally model the physical Data Vault. During this session we will do some exercises (no, no push-ups!!) to get our brains up and running for modeling.

-

Enterprise Data & BI & Analytics 19 #EDBIA

I am pleased to say that I will be participating in this year’s Enterprise Data & Business Intelligence and Analytics Conference Europe, 18-22 November 2019, London. I will be speaking on the subject ‘From Conceptual to Physical Data Vault Data Model’ and (of course) on my hobby horse, temporal data: ‘Send Bi-Temporal Data from Ground to Vault to the Stars’. See my abstracts for the sessions below.

-

Success with bitemporal knowledge

I had just started working for a new client and was part of the data warehouse team responsible for building the data warehouse with Data Vault. We had developed a Data Vault generator. At that time we used an end date for the 'business timeline'. I remember how complicated it was to update the old records and how much server time it cost, especially when we wanted to insert records in between, for example to load history from old sources.
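Why an end-dated business timeline gets expensive can be sketched in a few lines of Python. The sketch assumes that every version physically stores [valid_from, valid_to]. The names `insert_version` and `OPEN_END` are hypothetical. Inserting a version in between means finding both neighbors, closing the predecessor with an UPDATE and deriving the new row's end date from its successor. In a database this becomes a read plus an update on existing rows for every such insert.

```python
from datetime import date

OPEN_END = date(9999, 12, 31)  # assumed marker for "valid until further notice"

def insert_version(timeline: list, valid_from: date) -> int:
    """Insert a version into an end-dated business timeline.

    Each version is a mutable [valid_from, valid_to] pair. Returns how many
    existing rows had to be updated (each one is an UPDATE in the database).
    """
    if any(v[0] == valid_from for v in timeline):
        raise ValueError("a version with this valid_from already exists")
    timeline.sort(key=lambda v: v[0])
    predecessor = None
    successor = None
    for version in timeline:
        if version[0] < valid_from:
            predecessor = version      # last version starting earlier
        elif successor is None:
            successor = version        # first version starting later
    updates = 0
    if predecessor is not None:
        predecessor[1] = valid_from    # close the predecessor: an UPDATE
        updates += 1
    valid_to = successor[0] if successor is not None else OPEN_END
    timeline.append([valid_from, valid_to])
    timeline.sort(key=lambda v: v[0])
    return updates

if __name__ == "__main__":
    timeline = [[date(2020, 1, 1), OPEN_END]]
    insert_version(timeline, date(2022, 1, 1))  # new current version
    insert_version(timeline, date(2021, 1, 1))  # late-arriving version in between
    for valid_from, valid_to in timeline:
        print(valid_from, valid_to)
```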

-

Fact-Oriented Modeling

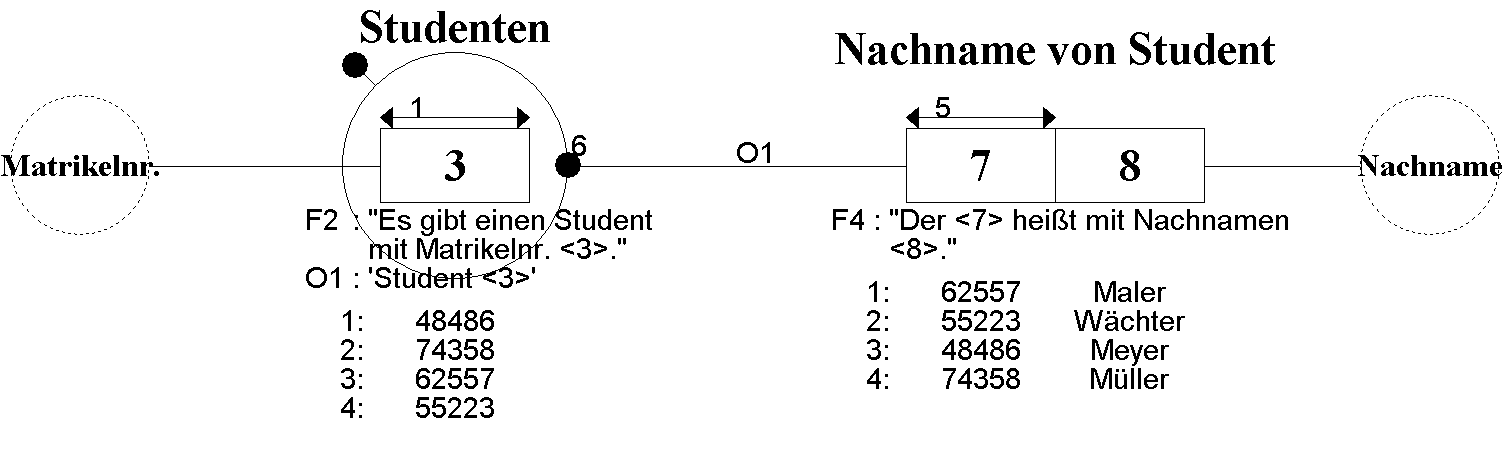

Information Modeling in Natural Language

How can I use natural language in my modeling process to achieve high-quality information models?

The answer to your question is Fact-Oriented Modeling (FOM). This is a family of conceptual methods in which facts are modeled precisely as relationships with an arbitrary number of arguments. This type of modeling makes it easier to understand the model, because natural language is used to create the data model. That's what makes FOM fundamentally different from all other modeling methods. This approach sounds new and exciting. However, its basic features date back to the 1970s.
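The idea of "facts as relationships with an arbitrary number of arguments" can be illustrated with a small Python sketch. It models a fact type with any number of roles and verbalizes concrete facts as natural-language sentences. The class name, the `{0}`-style sentence template and the enrollment example are assumptions for illustration. They are not the notation of FCO-IM, ORM2 or NIAM.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class FactType:
    """A fact type: object types playing roles, plus a sentence pattern."""
    name: str
    roles: tuple    # object type per role; any number of roles (n-ary)
    template: str   # natural-language pattern with one slot per role

    def verbalize(self, *values: str) -> str:
        """Turn one concrete fact (one value per role) into a sentence."""
        if len(values) != len(self.roles):
            raise ValueError(
                f"{self.name} expects {len(self.roles)} values, got {len(values)}"
            )
        return self.template.format(*values)

# Hypothetical ternary fact type: three roles in a single fact.
enrollment = FactType(
    name="Enrollment",
    roles=("Student", "Course", "Semester"),
    template="Student {0} attends course {1} in semester {2}.",
)

if __name__ == "__main__":
    print(enrollment.verbalize("Anna", "Data Modeling", "2019"))
```

Because each fact is read back as a plain sentence, a domain expert can check the model without knowing any diagram notation.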

-

Fact-Oriented Modeling (FOM) - Family, History and Differences

Months ago I talked to Stephan Volkmann, the student I mentor, about possible topics for a seminar paper. One suggestion was to write about information modeling, namely FCO-IM, ORM2 and NIAM, siblings in the Fact-Oriented Modeling (FOM) family. In my opinion, FOM is the most powerful technique for building conceptual information models, as I wrote in a previous blog post, Sketch Notes Reflections at TDWI Roundtable with FCO-IM.

-

Fact-Oriented Modeling

Information Modeling in Natural Language

How can I use natural language in my modeling process to create high-quality information models?

The answer to your question is fact-oriented modeling (FOM). This is a family of conceptual methods in which facts are modeled precisely as relationships with any number of arguments. This type of modeling makes the model easier to understand, because natural language is used to create the data model. This also fundamentally distinguishes FOM from all other modeling methods. The approach sounds new and exciting. However, its basic features go back to the 1970s.

-

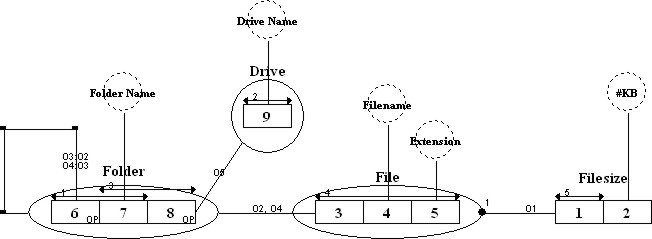

FCO-IM at TDWI Roundtable

FCO-IM - Data Modeling by Example

Do you want to attend a presentation about Fully Communication Oriented Information Modeling (FCO-IM) in Frankfurt?

I’m very proud that we, the board of the TDWI Roundtable FFM, were able to win Marco Wobben as a speaker on FCO-IM. In my opinion, it’s one of the most powerful techniques for building conceptual information models. And the best part is that such models can be automatically transformed into ERM, UML, relational or dimensional models and much more. This way we can all gain more wisdom in data modeling.

But what is information modeling? Information modeling is making a model of the language used to communicate about some specific domain of business in a more or less fixed way. This involved not only the words used but also typical phrases and patterns that combine these words into meaningful standard statements about the domain [3].
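The idea of "typical phrases and patterns" that turn words into standard statements can be shown with a toy Python sketch. It is an illustration under my own assumptions, not the FCO-IM procedure or any tool's API. Each sentence pattern is a fixed phrase with variable parts (roles). Concrete example sentences are classified by the pattern they match, which yields the value for each role. The template syntax `<Role>`, the function names and the example sentences are hypothetical.

```python
import re

def pattern_from_template(template: str) -> re.Pattern:
    """Compile e.g. 'Student <Student> attends course <Course>.' into a regex.

    Fixed phrases are matched literally; each <Role> becomes a named group.
    Role names must be unique within a template and consist of word characters.
    """
    parts = re.split(r"<(\w+)>", template)  # literal, role, literal, role, ...
    regex = ""
    for i, part in enumerate(parts):
        if i % 2 == 0:
            regex += re.escape(part)        # fixed phrase
        else:
            regex += f"(?P<{part}>.+?)"     # variable part filled by a value
    return re.compile(regex)

def classify(sentence: str, templates: dict):
    """Return (fact type name, role values) for the first matching template, else None."""
    for name, template in templates.items():
        match = pattern_from_template(template).fullmatch(sentence)
        if match:
            return name, match.groupdict()
    return None

# Hypothetical sentence patterns for two fact types.
TEMPLATES = {
    "Enrollment": "Student <Student> attends course <Course>.",
    "Teaching": "Course <Course> is taught by <Lecturer>.",
}

if __name__ == "__main__":
    print(classify("Student Anna attends course FCO-IM.", TEMPLATES))
    print(classify("Course FCO-IM is taught by Marco.", TEMPLATES))
```

The model is built bottom-up from sentences that domain experts actually say, rather than from abstract entities.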

-

Flashback to the 38th TDWI Roundtable in Frankfurt

Our roundtable in Frankfurt in March started with a bang:

Marketing for BI is even lower in the priority order than documentation.

-

Follow Up Data Vault EXASOL Webinar

In July 2016, Mathias Brink and I gave a webinar on how to implement Data Vault on an EXASOL database. Read more about it in my previous blog post or watch the recording on YouTube.

Afterward, I received a lot of questions about our webinar. I'll now answer all the questions I have received so far. If you have further questions, feel free to ask via my contact page or via Twitter, or write a comment right here.

-

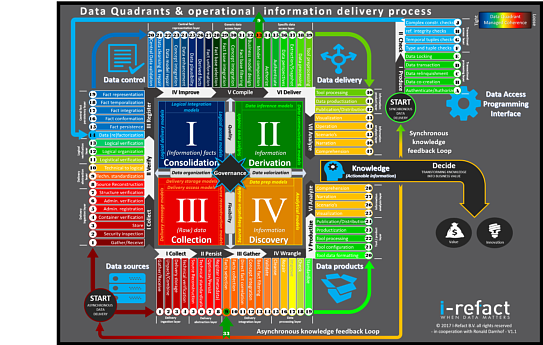

Full Scale Data Architects at DMZ 2017

As already mentioned in my previous blog post, I will give a talk on the first day of the Data Modeling Zone 2017 about temporal data in the data warehouse.

Another interesting talk will take place on the third day of the DMZ 2017: Martijn Evers will give a full day session about Full Scale Data Architects.

Ahead of this session there will be a kickoff event sponsored by I-Refact, data42morrow and TEDAMOH: at 6 pm on Tuesday, 24 October, after the second day of the Data Modeling Zone 2017, everyone interested can meet up and join the launch of the German chapter of Full Scale Data Architects.

-

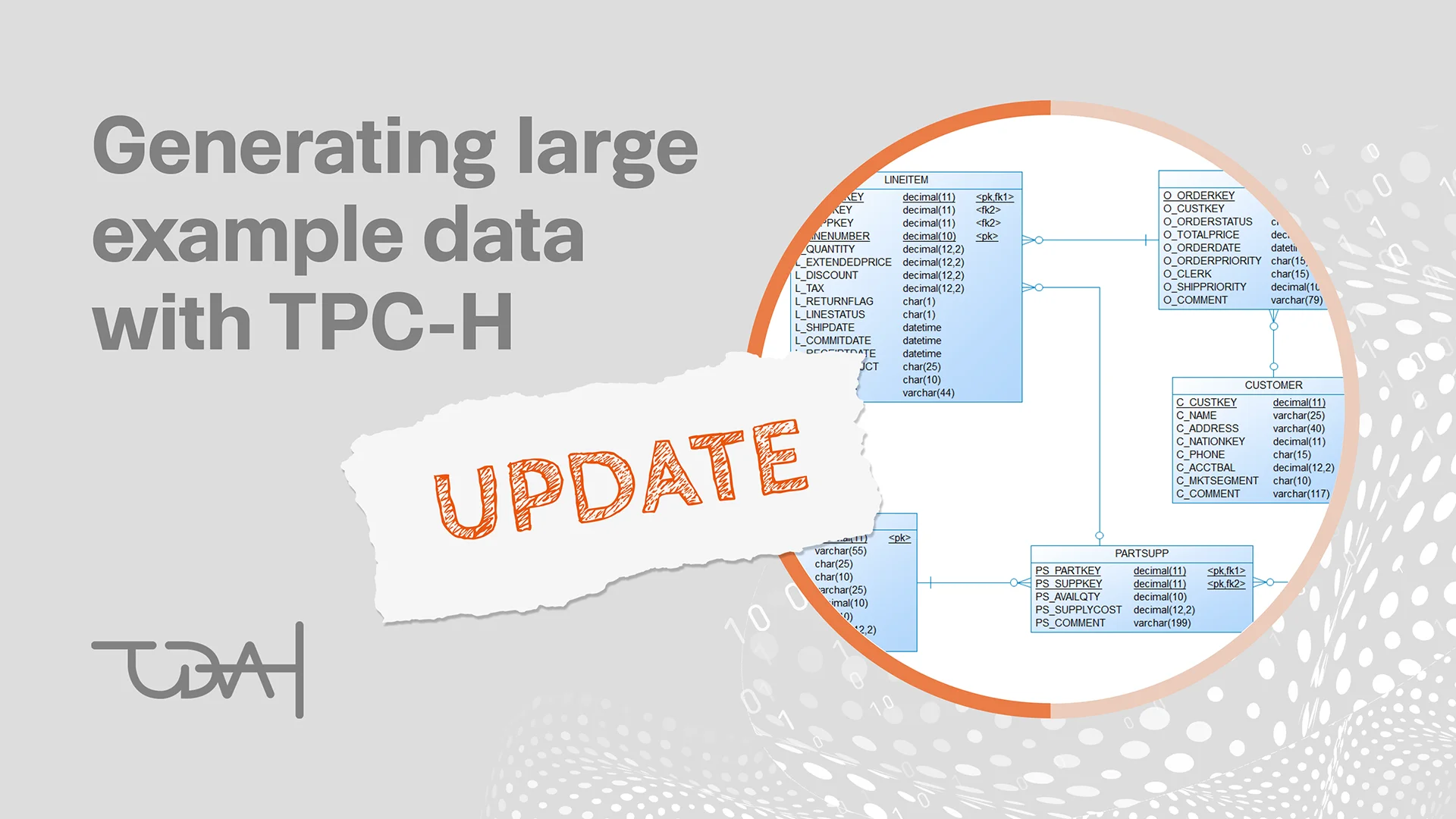

Generating large example data with TPC-H

In the past, I have repeatedly needed large data sets to test data logistics processes or a database technology for Data Vault.
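When deciding how large a generated data set should be, it helps to know that TPC-H data scales linearly with a scale factor, which is passed to the `dbgen` generator with `-s`. The small NATION and REGION tables are the exception. The Python helper below is a sketch with hypothetical names. It estimates the row count per table from the base cardinalities in the TPC-H specification. The LINEITEM figure is only approximate, because its exact row count varies slightly.

```python
# Base cardinalities at scale factor 1, per the TPC-H specification.
# LINEITEM is approximate: its exact row count varies slightly.
ROWS_PER_SF = {
    "supplier": 10_000,
    "part": 200_000,
    "partsupp": 800_000,
    "customer": 150_000,
    "orders": 1_500_000,
    "lineitem": 6_000_000,
}
FIXED_ROWS = {"nation": 25, "region": 5}  # independent of the scale factor

def estimated_rows(scale_factor: float) -> dict:
    """Estimate the row count of each TPC-H table for a given scale factor."""
    if scale_factor <= 0:
        raise ValueError("scale factor must be positive")
    counts = {table: round(base * scale_factor) for table, base in ROWS_PER_SF.items()}
    counts.update(FIXED_ROWS)
    return counts

if __name__ == "__main__":
    for sf in (1, 10, 100):
        rows = estimated_rows(sf)
        print(f"SF {sf}: about {sum(rows.values()):,} rows, "
              f"lineitem ~{rows['lineitem']:,}")
```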

-

Generating large example data with TPC-H

In the past, I have repeatedly needed large data sets to test data logistics processes or a database technology for Data Vault.

-

High performance - Data Vault and Exasol

You may have received an e-mail from EXASOL or ITGAIN inviting you to our forthcoming webinar, like this one:

Do you have difficulty incorporating different data sources into your current database? Would you like an agile development environment? Or perhaps you are using Data Vault for data modeling and are facing performance issues?

If so, then attend our free webinar entitled “Data Vault Modeling with EXASOL: High performance and agile data warehousing.” The 60-minute webinar takes place on July 15 from 10:00 to 11:00 am CEST.